Prompt Engineering: How to Talk to AIs like ChatGPT?

It’s challenging to meet someone who hasn’t heard about GPT and similar models this year. These Large Language Models (LLMs) signify a groundbreaking shift in the domains of machine learning and artificial intelligence. A field that remained obscure for most of its history is now, thanks to tools like ChatGPT, an integral part of daily life for a vast segment of the global population.

As a researcher dedicated to this field for over four years, I have extensively used these tools, particularly this year. This journey has greatly deepened my understanding of LLMs and the art of prompt engineering. Consequently, this article serves as a primer on prompt engineering, delving into the array of techniques used to control LLMs.

What are prompts and prompt engineering?

Prompt engineering is the strategic creation of prompts for pre-trained models like GPT, BERT, and others. A prompt describes what we ask the model to do, and the aim is to steer the model towards a specific, desired behavior. Successful prompt engineering hinges on meticulously defining the prompt with appropriate examples, relevant context, and clear directives. It demands a solid understanding of the model’s underlying mechanisms and of the problem at hand. This knowledge is crucial to ensure that the examples included in the prompt are as representative and varied as possible, closely mirroring the real-world distribution of input-output pairs that characterize the problem.

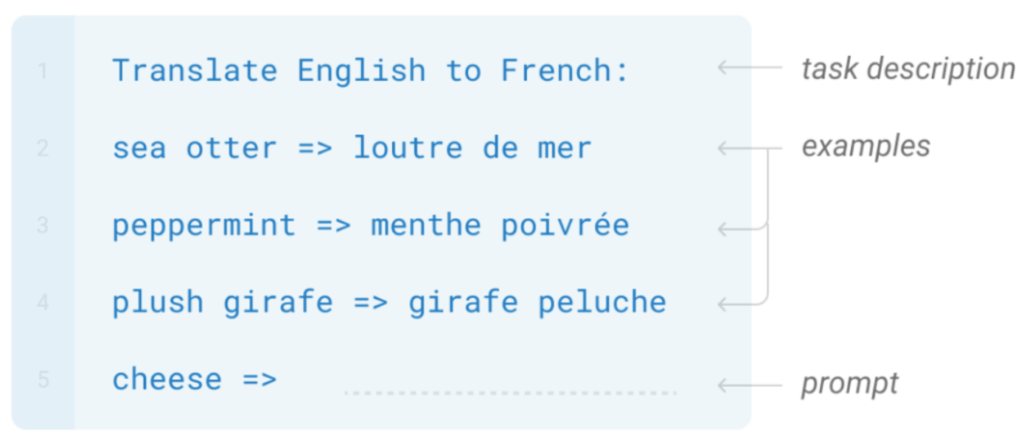

Consider the simple task of translating text from English to French. Achieving this through prompt engineering is remarkably straightforward. One needs a pre-trained model, such as GPT-4, and a well-crafted prompt. This prompt should (1) outline the task, (2) provide a few example sentences with their translations, and (3) include the specific sentence to translate, as demonstrated in the figure below. That’s it! GPT-4, already trained on an enormous corpus, has learned what translation is. It only needs the right prompt to apply that skill.
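As a rough illustration, the three-part prompt described above can be assembled with plain Python string formatting. The task wording, the example pairs, and the `=>` separator are assumptions chosen to mirror the figure. The resulting string is what you would send to whichever model or API you use.

```python
def build_translation_prompt(examples, sentence):
    """Assemble a few-shot prompt: task description, example pairs, then the query.

    `examples` is a list of (english, french) pairs; the layout is illustrative.
    """
    lines = ["Translate English to French:"]   # 1) outline the task
    for english, french in examples:           # 2) a few worked examples
        lines.append(f"{english} => {french}")
    lines.append(f"{sentence} =>")              # 3) the sentence to translate
    return "\n".join(lines)


examples = [
    ("sea otter", "loutre de mer"),
    ("peppermint", "menthe poivrée"),
    ("plush giraffe", "girafe peluche"),
]
print(build_translation_prompt(examples, "cheese"))
```

The prompt ends with `cheese =>`, so the most natural continuation for the model is the French word itself.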

Zero-, one- and few-shot prompts

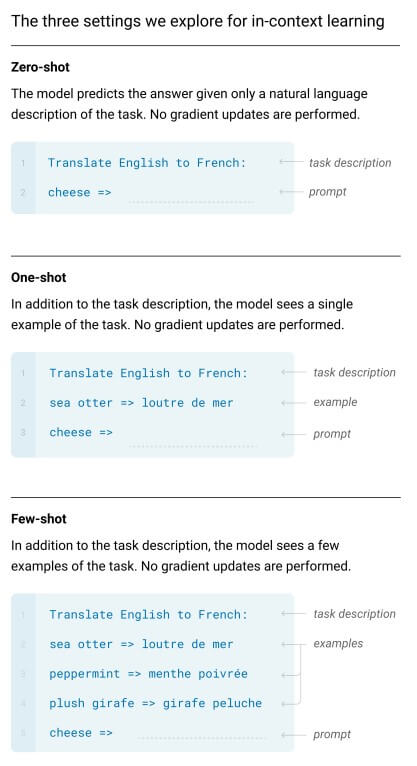

Prompts can be classified in various ways. Take, for instance, zero-shot, one-shot, and few-shot prompts, which are named after the number of examples provided to the model for the task. In a zero-shot setting, the LLM receives only the task description and the input. In the figure, for example, we simply ask it to translate ‘cheese’. Zero-shot prompts already achieve impressive performance, as evidenced by this particular paper.

Despite their efficacy, I generally avoid zero-shot prompts for two reasons. First, adding just a few examples can significantly improve performance, and you don’t need many, as highlighted in another paper. More crucially, by including a few examples, you not only clarify the task for the model but also illustrate the desired response format. In zero-shot translation, the model might respond with “Sure, here is the translation of your text…”. With a few-shot prompt, however, it picks up from the examples that a text followed by “=>” should be followed directly by its translation. This is especially useful when you need to control the model’s output precisely, as in commercial applications.
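The `=>` format has a practical payoff. Each example answer ends at a line break, so the answer can be cut at the first newline. The sketch below assumes you already have a raw completion string from some model. The sample strings are hand-written for illustration, not real model output. Many completion APIs also accept a stop sequence such as `"\n"`, which performs the same truncation server-side.

```python
def extract_answer(completion: str) -> str:
    """Keep only the text that the few-shot format marks as the answer.

    A model continuing "cheese =>" may go on to invent further example pairs,
    so everything after the first line break is discarded.
    """
    first_line = completion.strip().split("\n", 1)[0]
    return first_line.strip()


# Hypothetical completions, written by hand for illustration.
few_shot_completion = " fromage\nbread => pain\nwine => vin"
zero_shot_completion = "Sure, here is the translation of your text:\nfromage"

print(extract_answer(few_shot_completion))   # fromage
print(extract_answer(zero_shot_completion))  # Sure, here is the translation of your text:
```

The same naive parser works for the few-shot output but returns the chatty preamble for the zero-shot one. That is the kind of formatting drift that a few examples help prevent.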

Dynamic prompts

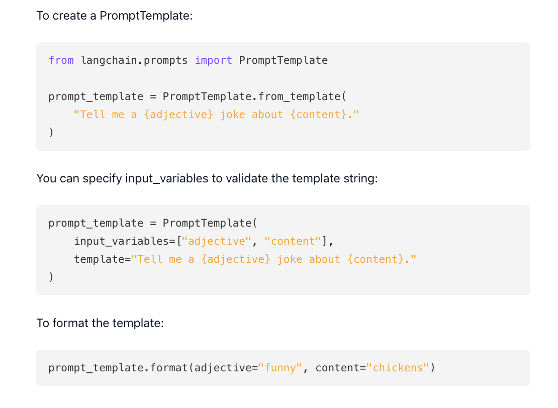

Using tools like LangChain, we can also create dynamic prompts. In the example above, ‘cheese’ becomes a variable that can be replaced by any word we wish to translate. This seemingly simple concept paves the way for complex systems in which parts of the prompt are added or removed in response to user interaction.
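LangChain provides prompt-template classes for this. To keep the example dependency-free, the sketch below uses Python’s standard `string.Template` instead, so it is an analogue of the idea, not LangChain’s own API. The template text and the `$word` placeholder name are assumptions.

```python
from string import Template

# The word to translate is now a variable instead of a hard-coded "cheese".
TRANSLATION_TEMPLATE = Template(
    "Translate English to French:\n"
    "sea otter => loutre de mer\n"
    "peppermint => menthe poivrée\n"
    "$word =>"
)

for word in ["cheese", "bread"]:
    print(TRANSLATION_TEMPLATE.substitute(word=word))
    print("---")
```

`substitute` raises a `KeyError` if a placeholder is left unfilled, which catches missing variables early. `safe_substitute` is the lenient alternative.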

For instance, a dynamic prompt for a chatbot might incorporate elements from the ongoing conversation with a user. This helps the bot understand the context of the discussion and react more appropriately. Similarly, a prompt initially designed for text generation can be dynamically adapted to revise a given text, ensuring it aligns with previously generated content. This flexibility allows for more nuanced, context-aware interactions, enriching the user experience and simplifying development.
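For the chatbot case, a minimal sketch is to rebuild the prompt on every turn from a fixed instruction plus the most recent messages. The `max_turns` cut-off, the speaker labels, and the instruction text are illustrative assumptions, not a prescribed format.

```python
def build_chat_prompt(history, user_message, max_turns=4):
    """Rebuild the prompt each turn from the latest conversation context.

    `history` is a list of (speaker, text) tuples; only the last `max_turns`
    entries are kept so the prompt stays within the model's context window.
    """
    lines = ["You are a helpful assistant. Answer using the conversation so far."]
    for speaker, text in history[-max_turns:]:
        lines.append(f"{speaker}: {text}")
    lines.append(f"User: {user_message}")
    lines.append("Assistant:")
    return "\n".join(lines)


history = [
    ("User", "I'm planning a trip to Lyon."),
    ("Assistant", "Great choice! What would you like to know?"),
]
print(build_chat_prompt(history, "What local dish should I try?"))
```

Keeping only a sliding window is the simplest policy. A summary of older turns, produced by another prompt, is a common refinement.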

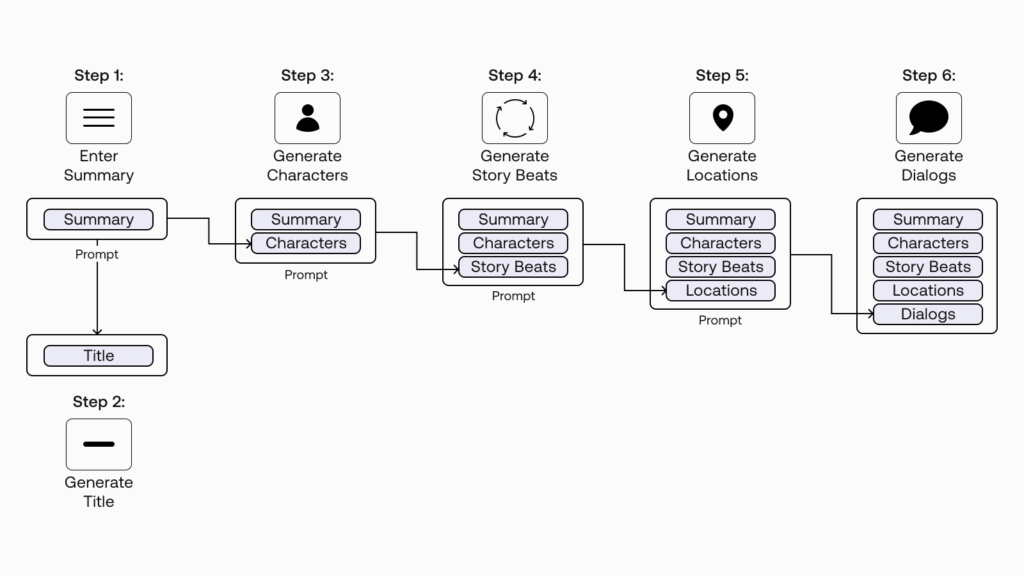

Prompt chaining

Prompts can also be used sequentially, a technique known as prompt chaining. For example, a prompt that answers a user query might include, as a variable, a summary of a previous query. That summary can itself be the output of a separate prompt. This layered approach allows for more complex and context-aware responses, as each prompt builds on the output of the previous one.
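Here is a minimal sketch of such a chain. `call_llm` is a hypothetical placeholder for whatever model client you use. In this sketch it is a stub returning canned text, so the example runs offline. What matters is the data flow: the output of the summarization prompt becomes a variable of the answering prompt.

```python
PROMPT_LOG = []  # every prompt sent to the (stub) model, kept for inspection


def call_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns canned text."""
    PROMPT_LOG.append(prompt)
    if prompt.startswith("Summarize"):
        return "The user wants vegetarian recipes."
    return "Try a ratatouille."


SUMMARY_PROMPT = "Summarize the user's previous request in one sentence:\n{previous_query}"
ANSWER_PROMPT = (
    "Context from earlier in the conversation: {summary}\n"
    "Answer the new question.\nQuestion: {question}\nAnswer:"
)


def answer_with_chain(previous_query: str, question: str) -> str:
    # Step 1: the first prompt condenses the earlier query.
    summary = call_llm(SUMMARY_PROMPT.format(previous_query=previous_query))
    # Step 2: that output is injected as a variable into the second prompt.
    return call_llm(ANSWER_PROMPT.format(summary=summary, question=question))


print(answer_with_chain("I don't eat meat, any recipe ideas?", "Something with eggplant?"))
```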

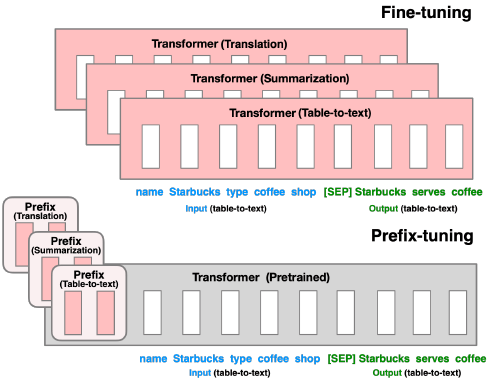

Continuous prompt

This is a more sophisticated approach that exploits how LLMs work internally. Prompts consist of words (more precisely, tokens), and LLMs process them through embeddings, which are numerical vector representations of those tokens. Consequently, rather than relying solely on textual prompts, we can apply an optimization algorithm such as Stochastic Gradient Descent directly to the prompt’s embedding representation. This method refines the input and keeps the model’s weights fixed, as opposed to fine-tuning the model itself. For example, in this article, the authors improve the model’s performance by concatenating a learned (fine-tuned) prompt with a standard prompt.
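To make the idea concrete, here is a deliberately tiny toy in pure Python, not a real LLM. The "frozen model" mean-pools a sequence of embedding vectors and applies fixed weights `w`. Gradient descent on a squared error updates only the prepended soft-prompt vectors; the model weights and the input embeddings never change. All names, sizes, and the learning rate are illustrative assumptions. A single training example stands in for the mini-batches that make real SGD stochastic.

```python
import random


def mean_pool(vectors):
    """Average a sequence of equal-length vectors, component by component."""
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def frozen_model(embeddings, w):
    """Toy stand-in for a frozen LLM: mean-pool the sequence, then a fixed linear head."""
    pooled = mean_pool(embeddings)
    return sum(wi * xi for wi, xi in zip(w, pooled))


def tune_soft_prompt(x_embeds, target, w, n_prompt=2, lr=0.5, steps=200, seed=0):
    """Learn `n_prompt` soft-prompt vectors prepended to `x_embeds`.

    Only the soft prompt is updated; `w` and `x_embeds` stay untouched.
    Loss is the squared error (prediction - target) ** 2.
    """
    rng = random.Random(seed)
    dim = len(w)
    prompt = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(n_prompt)]
    seq_len = n_prompt + len(x_embeds)
    for _ in range(steps):
        err = frozen_model(prompt + x_embeds, w) - target
        # d(loss)/d(prompt[i][d]) = 2 * err * w[d] / seq_len, the same for every prompt vector.
        for vec in prompt:
            for d in range(dim):
                vec[d] -= lr * 2 * err * w[d] / seq_len
    return prompt


w = [1.0, -0.5]                  # frozen "model" weights, never updated
x = [[0.2, 0.4], [0.6, -0.2]]    # embeddings of the ordinary text prompt
soft_prompt = tune_soft_prompt(x, target=1.0, w=w)
print(round(frozen_model(soft_prompt + x, w), 4))  # 1.0
```

In a real setting, the frozen model is the LLM itself and the gradients come from an autodiff framework. The principle is the same: the learned vectors are concatenated with the embeddings of the ordinary prompt.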

Only-shot prompt?

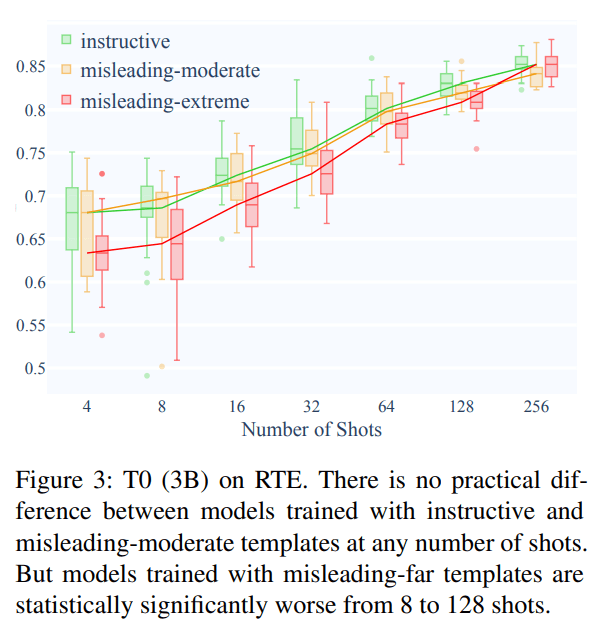

This method, while not officially named, stems from a research paper which posits that task descriptions and directives in prompts are largely useless. The paper shows that prompts can contain irrelevant or even contradictory information without significantly affecting the outcome, provided there are enough high-quality examples. I had learned this lesson through experience before discovering the paper. I used to craft complex prompts, laden more with directives and task descriptions than with examples. At some point, however, I tried using only examples, omitting directives and task descriptions entirely, and observed no notable difference. Essentially, my detailed instructions were superfluous; the model prioritized the examples. This can be explained by the fact that the examples are closer in form (more isomorphic) to the output to be generated, so the model’s attention mechanism focuses more on them than on any other part of the prompt.
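The experiment described above (drop the instructions, keep only the examples) can be set up as a small ablation harness. In the sketch below, `fake_llm` is a hypothetical stub, not a model. By construction, it ignores any instructions and answers from a tiny fixed vocabulary. The scores it produces therefore say nothing about real LLMs. It only exercises the harness, and you would replace it with a real API call and a larger evaluation set. The instruction text and evaluation pairs are also illustrative assumptions.

```python
EXAMPLES = "sea otter => loutre de mer\npeppermint => menthe poivrée\n"
INSTRUCTIONS = (
    "You are an expert translator. Translate the English text into French. "
    "Answer with the translation only, without explanations.\n"
)

# Two prompt variants that differ only in the presence of directives.
VARIANTS = {
    "instructions + examples": lambda s: INSTRUCTIONS + EXAMPLES + f"{s} =>",
    "examples only": lambda s: EXAMPLES + f"{s} =>",
}

EVAL_SET = [("cheese", "fromage"), ("bread", "pain"), ("wine", "vin")]


def fake_llm(prompt: str) -> str:
    """Hypothetical stub, NOT a real model: answers from a fixed vocabulary
    and, by construction, ignores any instructions in the prompt."""
    vocabulary = {"cheese": "fromage", "bread": "pain", "wine": "vin"}
    last_line = prompt.rsplit("\n", 1)[-1]          # e.g. "cheese =>"
    query = last_line.removesuffix("=>").strip()
    return vocabulary.get(query, "")


def accuracy(llm, build_prompt, eval_set):
    """Fraction of evaluation items whose completion exactly matches the reference."""
    hits = 0
    for source, reference in eval_set:
        hits += int(llm(build_prompt(source)).strip() == reference)
    return hits / len(eval_set)


for name, build in VARIANTS.items():
    print(f"{name}: {accuracy(fake_llm, build, EVAL_SET):.2f}")
```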

Chain of thought prompts

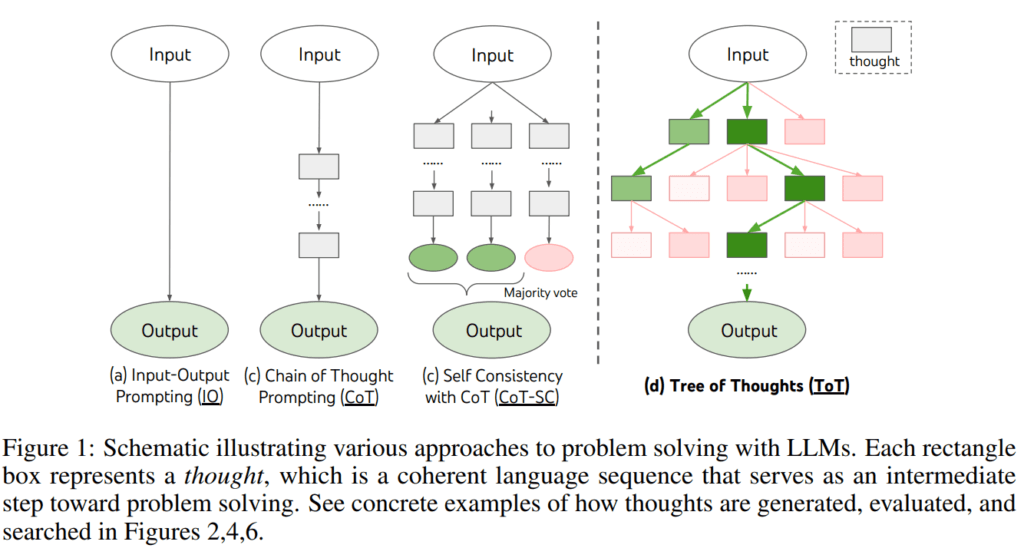

Chain of Thought (CoT) prompts involve structuring examples not as simple “X -> Y” transformations but as “X -> Deliberating on X -> Y”. This format guides the model to engage in a thought process about X before arriving at the final answer Y. If you’re curious about the nuances of this approach, there’s a detailed paper on the subject.

However, it’s crucial to remember that most modern LLMs are autoregressive: they generate text one token at a time, each token conditioned only on the tokens already produced. This is why the “X -> Deliberating on X -> Y” structure is effective, whereas a format like “X -> Y -> Explain why Y is the answer” is less so. In the latter case, the model has already committed to Y and then concocts a rationale for its choice, which can lead to flawed or even comical reasoning. Recognizing the autoregressive nature of LLMs is essential for effective prompt engineering.
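Here is a sketch of the “X -> Deliberating on X -> Y” layout in a few-shot prompt. The reasoning is placed before the answer, so an autoregressive model generates it first and conditions the answer on it. The arithmetic exemplar, the “The answer is” marker, and the regular expression used to read the final answer back are assumptions, not a fixed standard.

```python
import re

# One hand-written exemplar; real prompts would usually include several.
COT_EXAMPLES = [
    {
        "question": "A shop has 5 apples and receives 2 boxes of 3 apples. How many apples does it have?",
        "reasoning": "2 boxes of 3 apples make 6 apples. 5 + 6 = 11.",
        "answer": "11",
    },
]


def build_cot_prompt(question: str) -> str:
    """Few-shot CoT prompt: each exemplar shows the reasoning BEFORE the answer."""
    blocks = [
        f"Q: {ex['question']}\nA: {ex['reasoning']} The answer is {ex['answer']}."
        for ex in COT_EXAMPLES
    ]
    blocks.append(f"Q: {question}\nA:")  # the model continues with its own reasoning
    return "\n\n".join(blocks)


def extract_final_answer(completion: str):
    """Read back the number after the last 'The answer is' marker, if any."""
    matches = re.findall(r"The answer is\s+(-?\d+(?:\.\d+)?)", completion)
    return matches[-1] if matches else None


print(build_cot_prompt("Tom has 3 pens and buys 4 more. How many pens does he have?"))
print(extract_final_answer("3 pens plus 4 pens is 7 pens. The answer is 7."))  # 7
```

Swapping the order to “The answer is 11. Because…” would reproduce the weaker “X -> Y -> Explain why Y” pattern.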

Further research has expanded on the CoT concept. More sophisticated strategies include self-consistency, which samples multiple CoT responses and keeps the final answer that appears most often (paper here), and the Tree of Thoughts approach, which explores several branches of reasoning rather than a single linear chain, as described in several papers (see 1 & 2). These advances underscore the growing complexity and great potential of prompt engineering.
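At its core, self-consistency is a majority vote over the final answers of several sampled reasoning paths. In the sketch below, the completions are hard-coded stand-ins for samples drawn at a non-zero temperature. The answer extractor assumes a “The answer is …” convention. Both are illustrative assumptions.

```python
import re
from collections import Counter


def final_answer(completion: str):
    """Pull the numeric answer after 'The answer is', or None if absent."""
    match = re.search(r"The answer is\s+(-?\d+(?:\.\d+)?)", completion)
    return match.group(1) if match else None


def self_consistency(completions):
    """Majority vote over the final answers of several sampled CoT completions."""
    answers = [a for a in map(final_answer, completions) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Hypothetical samples for the same question, as if drawn with temperature > 0.
samples = [
    "5 + 2 * 3 = 5 + 6 = 11. The answer is 11.",
    "2 boxes of 3 is 6, plus 5 gives 11. The answer is 11.",
    "5 + 2 = 7, times 3 is 21. The answer is 21.",
]
print(self_consistency(samples))  # 11
```

The vote is over answers, not over reasoning texts. Two different derivations that reach 11 reinforce each other, and the faulty path is outvoted.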

More & more

The world of prompting techniques is rapidly evolving, making it a challenge to stay current. While it’s impossible to cover every new development in this article, here’s a quick overview of other notable techniques:

- Self-ask: Using demonstrations in the prompt, this method leads the model to ask itself follow-up questions about specific details of a problem, enhancing its ability to answer the original question precisely.

- Meta-Prompting: Here, the model engages in a dialogue with itself, critiquing its own thought process, aiming to produce a more coherent outcome.

- Least to Most: This approach teaches the model to deconstruct a complex problem into smaller sub-problems, facilitating a more effective solution-finding process.

- Persona/Role Prompting: In this technique, the model is instructed to assume a specific role or personality, altering its responses accordingly.
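Least to Most lends itself to a compact sketch. One prompt asks for sub-questions, and then each sub-question is answered in turn, with the earlier answers appended to the context. `call_llm` and its canned replies are hypothetical stand-ins for a real model, and the prompt wording is an assumption.

```python
def call_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call, with canned replies."""
    if prompt.startswith("Break this problem"):
        return "How long does one ride take?\nHow many rides fit in 15 minutes?"
    if prompt.endswith("Q: How long does one ride take?\nA:"):
        return "4 + 1 = 5 minutes."
    return "15 / 5 = 3, so 3 rides."


def least_to_most(question: str) -> str:
    # Stage 1: ask the model to break the problem into simpler sub-questions.
    plan = call_llm(f"Break this problem into simpler sub-questions, one per line:\n{question}")
    sub_questions = [line for line in plan.splitlines() if line.strip()]
    # Stage 2: answer sub-questions in order, feeding earlier answers back in.
    context = f"Problem: {question}\n"
    answer = ""
    for sub_q in sub_questions:
        answer = call_llm(f"{context}Q: {sub_q}\nA:")
        context += f"Q: {sub_q}\nA: {answer}\n"
    return answer  # the last sub-question is the original problem, restated


QUESTION = (
    "Climbing the slide takes 4 minutes and sliding down takes 1 minute. "
    "The slide closes in 15 minutes. How many rides are possible?"
)
print(least_to_most(QUESTION))
```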

Through this article, I hope to have introduced you to some of the more innovative and lesser-known prompt engineering techniques. The creativity and ingenuity in current research indicate that we are just beginning to uncover the full potential of these models and the prompts we use to control them.
