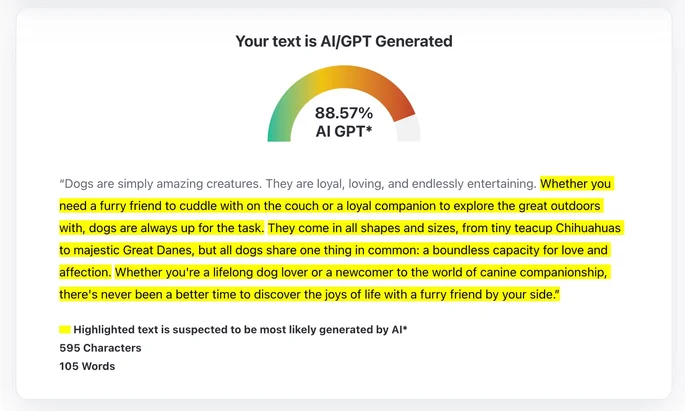

Can we spot text written by AI? While there are tools designed to detect AI-written text, they often fall short, especially with sophisticated models like GPT-4 (source). Many experts in the field have begun to dismiss these tools as “mostly snake oil” (source). Even OpenAI, the creator of GPT-4, has discontinued its own AI detection tool, admitting that it performed poorly (source). So, what makes it so challenging to detect AI-generated text? And what can we do about it? Let’s dive into these questions.

Why is it so hard to detect if a piece of text is written by an AI?

In essence, detecting AI-written text requires a more advanced AI than the one that wrote it. But if such an AI existed, it would likely be used for text generation too, producing text that is even harder to detect. Furthermore, tools like Quillbot or Wordtune can rework AI-generated content to make it almost indistinguishable from human writing (source). We have therefore reached a point in the arms race where text generators come out on top.

The only scenario where an AI might reliably identify human-written text is when the text contains obvious spelling errors or unique stylistic quirks, and even these traits can be simulated by an AI. Conversely, texts that are very precise and formal can be mistakenly flagged as AI-generated. As a result, AI detection tools can produce a large number of false positives (human text flagged as AI) and false negatives (AI text that slips through), which makes their verdicts hard to trust.
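To see why false positives matter so much, here is a small Bayes’ rule calculation of how often a flag is actually correct. The detector’s rates and the share of AI-written essays are made-up, hypothetical numbers chosen only for illustration; they do not describe any real tool.

```python
def share_of_flags_that_are_ai(ai_rate, true_positive_rate, false_positive_rate):
    """Bayes' rule: probability that a flagged text really is AI-written."""
    flagged_ai = ai_rate * true_positive_rate            # AI texts correctly flagged
    flagged_human = (1 - ai_rate) * false_positive_rate  # human texts wrongly flagged
    return flagged_ai / (flagged_ai + flagged_human)


# Hypothetical detector: flags 90% of AI-written essays but also 10% of human ones,
# applied to a class where 1 essay in 10 is AI-written.
p = share_of_flags_that_are_ai(ai_rate=0.10, true_positive_rate=0.90, false_positive_rate=0.10)
print(f"{p:.0%} of flagged essays are actually AI-written")
```

With these assumed numbers, a flag is right only half of the time: the 10% false positive rate, applied to the large pool of human essays, produces as many flags as the detector’s true hits.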

Interestingly, you might think that an advanced AI like GPT-4 could easily spot text written by its predecessor, GPT-3.5. This is likely true. But how can you be sure that a given text was written by GPT-3.5 in the first place? If you knew, you could check the detector against that knowledge and trust its result more; but if you knew, you wouldn’t need the test at all. In practice you don’t know, so you can’t verify the result, and therefore can’t trust it. The problem is circular. So, is there any way to detect AI-generated text? Not for now, but there are ideas.

Legal solutions for AI detection

Under Article 52 of the AI Act, recently voted by the European Parliament:

The AI system, the provider or the user must inform any person exposed to the system in a timely, clear manner when interacting with an AI system, unless obvious from context

Artificial Intelligence Act

In other words, once the AI Act is implemented in Europe, people receiving emails, messages or other documents written by an AI must be informed of that fact. China, the USA and the G7 are also working towards the identification of AI-generated content.

This will surely help, but there will also be bad actors. If testing cannot prove that a text was written by an AI, we cannot apply legal pressure when that fact is not disclosed. So while this law is a good idea, it will be difficult to enforce in practice. Moreover, while Article 52 will surely change the behaviour of companies, individuals may not follow such rules. Yes, I am thinking of that master’s student writing his entire thesis with GPT, but other situations apply as well.

Technical solutions for AI detection

Once again, technology may save us all. In a recent paper, researchers at the University of Maryland defined a way to “watermark” AI-generated text. Watermarking is the process of transforming an output by adding a hard-to-remove element that proves its source. It is generally used for copyright protection, but here it is used to help AI detectors.

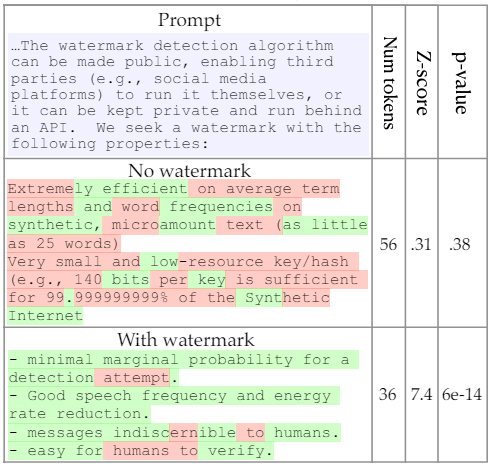

How can we watermark text? The paper proposes to define a random, secret list of ‘green’ words. Then, when generating text, the model is nudged to use these words more often than it normally would. A text containing far more green words than chance would predict is then very likely to have been written by the watermarked AI.
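A toy sketch of the idea, assuming a 16-word vocabulary and a stand-in “model” that finds every word equally likely (both hypothetical; a real model has tens of thousands of tokens and real probabilities). Following the paper’s “soft” variant, it does not strictly force green words but adds a bonus `delta` to their scores (logits) before sampling; `gamma` is the fraction of the vocabulary that is green. The key string and the values `gamma=0.5` and `delta=4.0` are illustrative choices, not taken from the paper.

```python
import math
import random

# Hypothetical toy vocabulary; a real model has tens of thousands of tokens.
VOCAB = ["the", "a", "cat", "dog", "sat", "ran", "on", "mat",
         "rug", "quickly", "slowly", "home", "park", "big", "small", "and"]


def make_green_list(vocab, key, gamma=0.5):
    """Use a secret key to pick a fixed random 'green' subset of the vocabulary."""
    rng = random.Random(key)
    return set(rng.sample(vocab, int(len(vocab) * gamma)))


def sample_token(logits, green, delta, rng):
    """Add `delta` to every green word's logit, then sample from the softmax."""
    boosted = {tok: score + (delta if tok in green else 0.0) for tok, score in logits.items()}
    top = max(boosted.values())
    # Unnormalized softmax weights (shifted for stability); choices() normalizes them.
    weights = [math.exp(score - top) for score in boosted.values()]
    return rng.choices(list(boosted), weights=weights)[0]


def generate(n_tokens, green, delta, seed=0):
    """Generate n_tokens words from the toy 'model', watermarked when delta > 0."""
    rng = random.Random(seed)
    # Hypothetical stand-in for a language model: all words equally likely.
    logits = {tok: 0.0 for tok in VOCAB}
    return [sample_token(logits, green, delta, rng) for _ in range(n_tokens)]


def green_fraction(tokens, green):
    """Share of the generated words that belong to the green list."""
    return sum(tok in green for tok in tokens) / len(tokens)


green = make_green_list(VOCAB, key="my-secret-key")
plain = generate(200, green, delta=0.0)
marked = generate(200, green, delta=4.0)
print(f"green fraction without watermark: {green_fraction(plain, green):.2f}")
print(f"green fraction with watermark:    {green_fraction(marked, green):.2f}")
```

Without the bonus, about half of the words are green, as chance predicts; with `delta=4.0`, nearly all of them are.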

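The figure from the paper shows two AI-generated texts, one without a watermark and one with it. The text without a watermark has a p-value of 0.38: if the text carried no watermark, a result at least this extreme would occur by chance 38% of the time, so the detector finds no evidence of a watermark. This is not a 38% chance that the text is human-written; both texts were in fact generated by the AI. With the watermark (many more green words), the p-value is practically 0. In this case, the detector can say with near certainty that the text was produced by the watermarked model.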
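The test behind these p-values can be sketched as a one-proportion z-test, which is how the paper scores a text: count the green words among the T words checked, compare that count with the γT expected by chance, and convert the resulting z-score into a one-sided p-value. The counts used below (200 words, 104 or 180 of them green, γ = 0.5) are hypothetical, not the figure’s numbers.

```python
import math


def watermark_z_score(num_green, num_tokens, gamma=0.5):
    """How many standard deviations the green count lies above chance (gamma * T)."""
    expected = gamma * num_tokens
    std = math.sqrt(num_tokens * gamma * (1 - gamma))
    return (num_green - expected) / std


def one_sided_p_value(z):
    """P(Z >= z) for a standard normal Z: the chance of a count this high without a watermark."""
    return 0.5 * math.erfc(z / math.sqrt(2))


# Hypothetical counts for two 200-word texts with gamma = 0.5 (100 green words expected by chance).
for label, green_count in [("no watermark", 104), ("watermark", 180)]:
    z = watermark_z_score(green_count, 200)
    print(f"{label:>12}: z = {z:5.2f}, p-value = {one_sided_p_value(z):.2g}")
```

The first text gets a p-value of roughly 0.3, so there is no evidence of a watermark; the second gets a p-value far below any reasonable threshold.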

The problem with a fixed list is that anyone who knows the green words could simply replace them. The paper therefore uses a more dynamic scheme: the green list changes after each generated word, because it is redrawn from a hash of the previous word, so there is no single list to leak. Such a technique could be developed into a reliable standard, and big AI models could then be forced to use this kind of watermark in the future.
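A minimal sketch of such a dynamic green list. The Maryland paper derives each step’s green list from a hash of the previous token; here, as an assumption, the hash also mixes in a secret key string, and the vocabulary, the uniformly random stand-in “model” and the key are hypothetical. It uses the paper’s simpler “hard” variant, where every generated word must come from the current green list. The detector never needs a stored list: it recomputes each step’s list from the text itself.

```python
import hashlib
import random

# Hypothetical toy vocabulary; a real model has tens of thousands of tokens.
VOCAB = ["the", "a", "cat", "dog", "sat", "ran", "on", "mat",
         "rug", "quickly", "slowly", "home", "park", "big", "small", "and"]


def green_list(prev_token, key, gamma=0.5):
    """Derive this step's green list from the previous word and a secret key."""
    digest = hashlib.sha256(f"{key}:{prev_token}".encode()).digest()
    rng = random.Random(digest)  # same (key, prev_token) -> same green list
    return rng.sample(VOCAB, int(len(VOCAB) * gamma))


def generate(n_tokens, key, watermark, seed=0):
    """Hard watermark: when enabled, each new word is drawn only from the current green list."""
    rng = random.Random(seed)
    tokens = ["the"]  # arbitrary first word
    for _ in range(n_tokens):
        # Hypothetical stand-in for a language model: pick uniformly at random.
        candidates = green_list(tokens[-1], key) if watermark else VOCAB
        tokens.append(rng.choice(candidates))
    return tokens


def count_green(tokens, key, gamma=0.5):
    """Detector: recompute every step's green list and count words that fall in it."""
    return sum(tok in green_list(prev, key, gamma) for prev, tok in zip(tokens, tokens[1:]))


key = "my-secret-key"
marked = generate(100, key, watermark=True)
plain = generate(100, key, watermark=False)
print("watermarked:    ", count_green(marked, key), "of 100 words green")
print("not watermarked:", count_green(plain, key), "of 100 words green")
print("wrong key:      ", count_green(marked, "another-key"), "of 100 words green")
```

With the right key, every word of the watermarked text is green, while the unwatermarked text and the wrong key both give roughly half, as chance predicts. Replacing a word only changes the checks for that word and the one after it, so scattered edits leave most of the signal intact; a thorough rewording, like the Quillbot-style paraphrasing mentioned earlier, could still weaken it.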

Conclusion

In conclusion, no, we can’t detect AI-written text, at least not reliably enough to enforce laws or to punish lazy students who use AI to write their entire master’s thesis. Nonetheless, we could develop techniques to watermark AI-written text and write laws that enforce their use. As things stand, this looks like the simplest solution.
