Natural Language Processing (NLP), an introduction

Natural Language Processing (NLP) is the study of human language through computers. It is often considered a subfield of Artificial Intelligence (AI), but it draws heavily on linguistics. In everyday life, NLP is all around us: Google search, spam filters, copyright infringement detection, auto-completion, and automatic translation are all examples of NLP applications. It also happens to be my field of study, so I wish to give you a bit of perspective on this incredible technology.

A bit of history

Some argue that AI started with NLP in 1954 with the Georgetown experiment. This experiment consisted of translating some 60 Russian sentences into English, and it was somewhat of a success. Around 1970, SHRDLU became one of the first language-controlled programs; it allowed simple conversations with its user about a world of blocks. All these advances seemed to show that AI and NLP would become very important in the coming decades.

However, this early success was misleading. Scientists of the time quickly realized how difficult the task really was, not just for automatic translation but for everything else that AI and NLP were supposed to solve. This was the beginning of the AI winter, an era during which progress in many areas of AI slowed and research was under-financed. It was a long and complex period in which AI earned a bad reputation in both industry and academia. Here is another article of mine on the subject.

After decades of research, AI and NLP had gained in maturity, but it was only in the 2010s that they regained their reputation. In 2011, Watson (an IBM AI system) won the TV quiz show Jeopardy! by defeating two of its most successful human champions. Apple, Google, and Amazon soon followed with their own virtual assistants, such as Siri and Alexa. Automated translation was becoming really good, and many other problems came to be solved. Since then, AI has been on the rise again, and so has NLP.

Examples of NLP tasks

Whenever we try to systematically and automatically extract or manipulate information from text with a computer, we are doing NLP. This ranges from simple tasks such as Part-Of-Speech tagging, which assigns a label such as verb, adjective, or pronoun to each word of a text, to Named Entity Recognition, where the goal is to find words or sequences of words that refer to entities such as people, objects, products, and other concepts in a text.
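To make Part-Of-Speech tagging a little more concrete, here is a deliberately naive sketch in Python. It simply looks up each word in a tiny hand-written dictionary. The lexicon, the tag names, and the function names are all made up for illustration; real taggers learn from large annotated corpora and use the context of each word rather than a fixed lookup.

```python
import re

# Hypothetical, hand-written lexicon (an assumption for this example only).
# Real taggers are trained on annotated text and handle ambiguous words.
TOY_LEXICON = {
    "the": "DET", "a": "DET",
    "cat": "NOUN", "pizza": "NOUN",
    "eats": "VERB", "is": "VERB",
    "hot": "ADJ", "small": "ADJ",
    "it": "PRON", "she": "PRON",
}


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def toy_pos_tag(text):
    """Pair each token with its tag from the lexicon, or 'UNK' if unknown."""
    return [(token, TOY_LEXICON.get(token, "UNK")) for token in tokenize(text)]


if __name__ == "__main__":
    for token, tag in toy_pos_tag("The small cat eats a hot pizza."):
        print(f"{token}\t{tag}")
```

Any word missing from the dictionary is tagged "UNK", which shows quickly why a pure lookup approach does not scale to real text.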

There are also more complex tasks, such as Sentiment Analysis, which assigns a polarity or subjectivity score to text such as comments in order to understand people's feelings and reactions. Others include Question-Answering systems, such as chatbots that can sometimes sustain a believable conversation, and automatic summarization, which condenses a text of several pages into a few paragraphs.
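As a rough illustration of what a polarity score can look like, here is a minimal lexicon-based sketch in Python. The two word lists and the scoring formula are assumptions chosen for this example, not a standard method; practical systems rely on much larger lexicons or trained models and must cope with negation, sarcasm, and context.

```python
import re

# Hypothetical mini word lists (assumptions made for this example only).
POSITIVE_WORDS = {"good", "great", "love", "excellent", "happy"}
NEGATIVE_WORDS = {"bad", "terrible", "hate", "awful", "sad"}


def toy_polarity(text):
    """Return a score in [-1.0, 1.0]: (positives - negatives) / opinion words.

    Returns 0.0 when the text contains no word from either list.
    """
    tokens = re.findall(r"[a-z']+", text.lower())
    positives = sum(token in POSITIVE_WORDS for token in tokens)
    negatives = sum(token in NEGATIVE_WORDS for token in tokens)
    opinion_words = positives + negatives
    if opinion_words == 0:
        return 0.0
    return (positives - negatives) / opinion_words


if __name__ == "__main__":
    for comment in ["I love this great pizza", "What a terrible, awful day"]:
        print(comment, "->", toy_polarity(comment))
```

A comment like "not good" would still be scored as positive here, which is exactly the kind of shortcoming that makes Sentiment Analysis harder than it first appears.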

There are many more NLP tasks, and if you are interested, here is a non-exhaustive list of the most important ones. Most of them are only partially solved because NLP is still quite a young and developing field.

The next step

Current NLP technology has a lot of potential but is only scratching the surface of what is possible. Past research focused mainly on a lexical and superficial analysis of words, paying little attention to deeper meaning. Understanding grammar and syntactic rules is certainly important, but it is not what language is about.

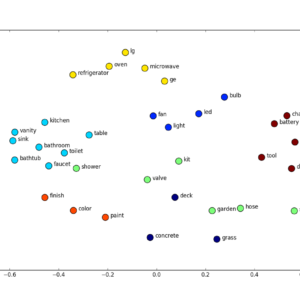

The future of NLP lies in the semantic understanding of text. A word is not just a sequence of letters but a textual representation of a reality, whether abstract or physical. Understanding that the word “pizza” refers to a food, and that it must therefore have a recipe and a country of origin, is easy for us humans. This is precisely the problem: we do it effortlessly, yet we do not understand how we understand. Thus, the next step for NLP is to pass this semantic knowledge of language on to machines so they can understand the world behind the words.
