This article is the first in a series in which I will try to explain neural networks as simply as I can. Artificial Neural Networks are currently among the most ubiquitous and most complex tools in Artificial Intelligence. Large networks can have millions of neurons and can be taught to solve some tasks with superhuman accuracy. Examples include playing chess and helping doctors with diagnostics. In this article, I will use simple examples to explain how Neural Networks make decisions.

Why do we use Neural Networks?

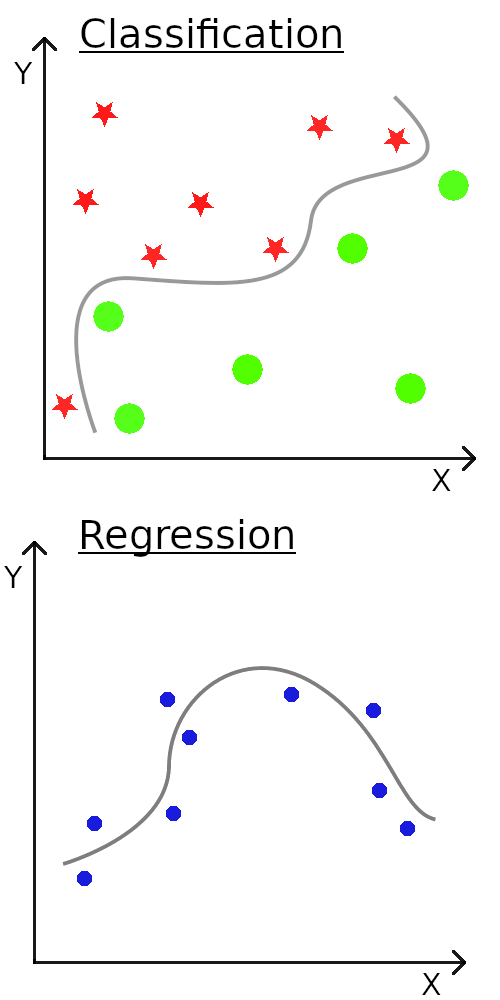

A good way to understand a tool is to understand what it does. Neural networks are mainly used for two kinds of tasks: classification and regression. We will focus on classification.

Classification means deciding which category something belongs to. For example, given a batch of emails, decide which ones are spam and which are not; or, given an image of an animal, decide whether it shows a cat, a dog, or something else. Mathematically, this means drawing a boundary between two or more sets of points.

If each point represents an email, its position is determined by the properties we have measured, such as the quality of the writing (grammar and spelling) and whether the sender is known. Deciding what to measure and how to measure it is a difficult task in itself, called feature engineering. Check out my article on word embeddings for an example of a common kind of Neural Network input.
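
To make "a point whose position is given by measurements" concrete, here is a minimal Python sketch. The `Mail` class and its three fields (`grammar_score`, `spelling_score`, `sender_known`) are hypothetical stand-ins for the measurements mentioned above, and the 0 to 1 scales are an assumption; real feature engineering is much more involved.

```python
from dataclasses import dataclass

@dataclass
class Mail:
    """A toy email record; every field is a hypothetical measurement."""
    grammar_score: float   # assumed scale: 0.0 (many errors) to 1.0 (flawless)
    spelling_score: float  # assumed scale: 0.0 to 1.0
    sender_known: bool     # True if the sender is already known

def mail_to_point(mail: Mail) -> tuple[float, float, float]:
    """Turn an email into a point in a 3D feature space."""
    return (mail.grammar_score, mail.spelling_score, 1.0 if mail.sender_known else 0.0)

newsletter = Mail(grammar_score=0.9, spelling_score=0.95, sender_known=True)
scam = Mail(grammar_score=0.3, spelling_score=0.4, sender_known=False)
print(mail_to_point(newsletter))  # (0.9, 0.95, 1.0)
print(mail_to_point(scam))        # (0.3, 0.4, 0.0)
```

Each email becomes a tuple of numbers, which is the only thing a neural network can actually take as input.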

A Neural Network

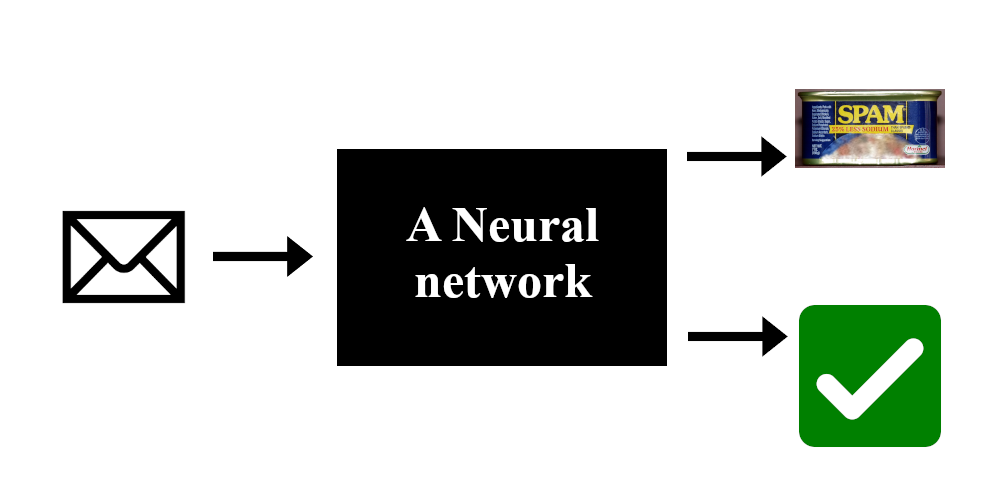

Let’s say we want to classify emails as either spam or not spam. We want a Neural Network that takes as input some representation of the email (quality of writing, authenticity, style, content, title, …) and, from this input, decides whether it is spam.

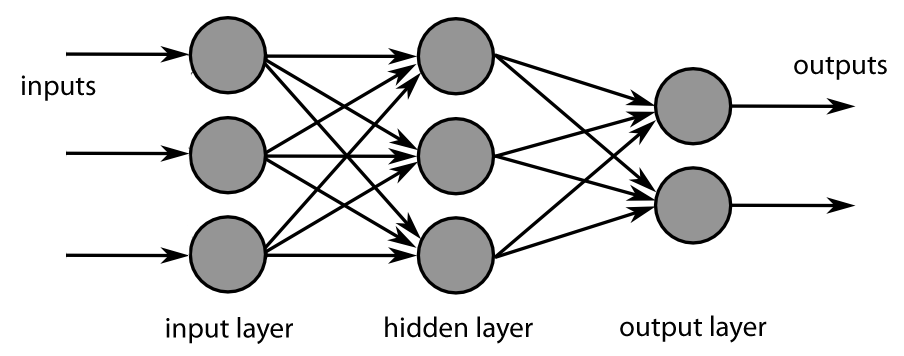

A neural network in its simplest form is a bunch of neurons arranged into layers and connected with each other. The input flows through the network from left to right and comes out the other end with an answer.

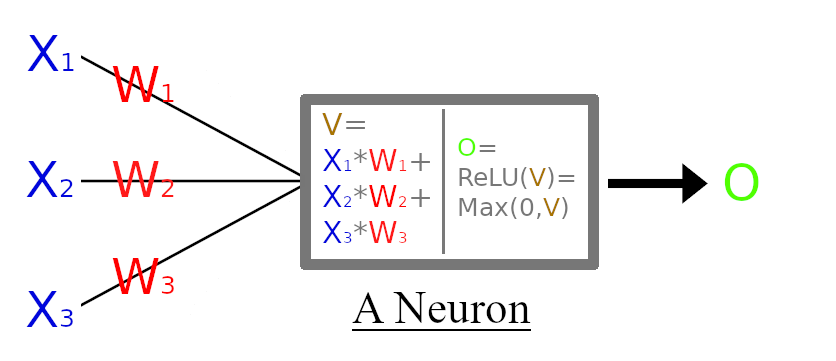

Each neuron (except those in the input layer) takes the values output by the neurons in the previous layer and multiplies each one by a so-called weight, which represents the strength of the link between the two neurons. It then sums these products and passes the result to an activation function, which gives the final output of the neuron. Activation functions are not too important here; I will explain them in another article. This final value is then either used by the next layer or becomes part of the final output of the network.
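
A single neuron can be sketched in a few lines of Python. This is an illustration under assumptions, not a reference implementation: it uses the sigmoid as the activation function (one common choice among several) and adds a constant bias term to the weighted sum, as most implementations do. The weights and inputs in the example are made up.

```python
import math

def sigmoid(z: float) -> float:
    """Assumed activation function: squashes any number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """Multiply each input by its weight, sum, add the bias, then apply the activation."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(total)

# Three values coming from the previous layer, with made-up weights.
# Weighted sum plus bias: 0.4 - 0.5 - 0.5 + 0.1 = -0.5, so this prints sigmoid(-0.5).
print(neuron([1.0, 0.5, -2.0], weights=[0.4, -1.0, 0.25], bias=0.1))
```

A large positive weight makes the neuron react strongly to that input, a negative weight makes the input push the output down, and a weight near zero means the link barely matters.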

Neurons are organised into layers. The input layer only stores the input values, the hidden layers transform the input, and the output layer makes the final decision. Each layer except the first takes as input the outputs of the neurons in the previous layer and outputs the result of each of its own neurons. The information therefore flows through the network from left to right and is transformed into an answer.
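
Chaining layers is just a loop in which each layer's outputs become the next layer's inputs. The sketch below assumes a sigmoid activation and a bias per neuron; representing a layer as a list of `(weights, bias)` pairs is a choice made for this illustration, and the numbers in `hidden` and `output` are arbitrary.

```python
import math

def sigmoid(z: float) -> float:
    """Assumed activation function: squashes any number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# A layer is a list of neurons; each neuron is (weights, bias),
# with one weight per neuron in the previous layer.
Layer = list[tuple[list[float], float]]

def forward(inputs: list[float], layers: list[Layer]) -> list[float]:
    """Pass values left to right: each layer's outputs feed the next layer."""
    values = inputs  # the input layer only stores the input values
    for layer in layers:
        values = [
            sigmoid(sum(v * w for v, w in zip(values, weights)) + bias)
            for weights, bias in layer
        ]
    return values

hidden = [([1.0, -1.0], 0.0), ([0.5, 0.5], -1.0)]  # 2 inputs -> 2 hidden neurons
output = [([2.0, -3.0], 0.5)]                       # 2 hidden -> 1 output neuron
print(forward([0.2, 0.7], [hidden, output]))        # a list holding one score in (0, 1)
```

The output layer here has a single neuron, so the network returns a single number; a network that chooses among several categories would simply have more output neurons.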

The inner workings

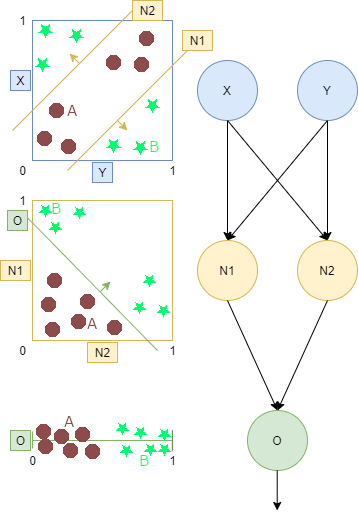

What is really happening inside a network? Let’s take a simple 2x2x1 network and a set of points representing emails. We have two inputs (X, Y), two hidden neurons (N1, N2) and one output neuron (O).

The inputs (X, Y) form a 2D space. X and Y can be anything measurable (quality of writing, authenticity, style, …). In the image above, the input space is in the top left. Let’s say the red circles are good emails and the green stars are spam.

We can imagine each point (X, Y) of the input space passing through the hidden layer and producing a point in a new space (N1, N2), also called a latent space. This new space is produced because each of the two hidden neurons draws a line in the input space: its output is low on one side of its line and high on the other.
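
Why does a neuron "draw a line"? With two inputs, the weighted sum w1*X + w2*Y + b is zero exactly on the line w1*X + w2*Y + b = 0, positive on one side of it and negative on the other. After a sigmoid activation (assumed here), points on the line give 0.5, points on the positive side give more and points on the negative side give less. The weights below (`w1=5`, `w2=0`, `b=-5`, which give the line X = 1) are hypothetical values chosen for illustration; the weight vector (w1, w2) plays the role of the arrow in the figure.

```python
import math

def sigmoid(z: float) -> float:
    """Assumed activation function: squashes any number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def hidden_neuron(x: float, y: float, w1: float, w2: float, b: float) -> float:
    """Output of one hidden neuron for the input point (X, Y)."""
    return sigmoid(w1 * x + w2 * y + b)

# Hypothetical neuron with weights (5, 0) and bias -5: its line is 5*X - 5 = 0,
# i.e. X = 1, and its arrow (the weight vector) points towards larger X.
for point in [(0.5, 0.3), (1.0, 0.3), (1.5, 0.3)]:
    print(point, round(hidden_neuron(*point, w1=5, w2=0, b=-5), 3))
# prints 0.076 (left of the line), 0.5 (on it) and 0.924 (right of it)
```

Larger weights make the transition from low to high sharper; the line itself only depends on the ratio between the weights and the bias.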

The red circles in the input space are separated from the green stars by these two lines. Both lines have an arrow pointing away from the red circles. This means that, for at least one of N1 or N2, each green star gets a higher value than the red circles.

We can see how points A and B move under this transformation. The red circles move to the bottom left, since they have low values for both neurons, while the green stars move to the right or to the top, since each of them gets a high value for one of the neurons.
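
The transformation into the latent space can be reproduced with made-up numbers. In this sketch, N1 draws the line X = 1 and N2 draws the line Y = 1, both with arrows pointing away from a cluster of red circles near the origin. The weights, the sigmoid activation and the sample points are all assumptions chosen to mimic the figure, not values taken from it.

```python
import math

def sigmoid(z: float) -> float:
    """Assumed activation function: squashes any number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def to_latent(x: float, y: float) -> tuple[float, float]:
    """Map an input point (X, Y) to its latent point (N1, N2)."""
    n1 = sigmoid(5 * x - 5)  # hypothetical N1: line X = 1, arrow towards larger X
    n2 = sigmoid(5 * y - 5)  # hypothetical N2: line Y = 1, arrow towards larger Y
    return n1, n2

red_circles = [(0.3, 0.5), (0.5, 0.2)]  # made-up good emails, near the origin
green_stars = [(2.0, 0.4), (0.4, 2.0)]  # made-up spam, beyond one of the two lines

for label, points in [("good", red_circles), ("spam", green_stars)]:
    for x, y in points:
        n1, n2 = to_latent(x, y)
        print(f"{label}: ({x}, {y}) -> ({n1:.2f}, {n2:.2f})")
```

Running it, the good emails land near (0, 0), the bottom left of the latent space, while each spam email lands near (1, 0) or (0, 1), that is, to the right or at the top.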

The output neuron transforms this latent space into a 1D space, since the output layer has only one neuron. As before, this amounts to drawing a line in the latent space, once again separating the red circles (good emails) from the green stars (spam).

In the end, we get a score of how strongly the network thinks the input is spam, and the green stars do indeed get higher scores than the red circles. Note that this is a fictitious example: real data is much more complex, and so are real neural networks.
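
Putting the pieces together gives a whole hypothetical 2x2x1 network. The two hidden neurons draw the lines X = 1 and Y = 1 in the input space, and the output neuron draws the line N1 + N2 = 0.5 in the latent space. The sigmoid activation, all the weights and the 0.5 decision threshold in `is_spam` are assumptions for illustration, not values from the figure.

```python
import math

def sigmoid(z: float) -> float:
    """Assumed activation function: squashes any number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def spam_score(x: float, y: float) -> float:
    """Hypothetical 2x2x1 network: input (X, Y) -> latent (N1, N2) -> output O."""
    n1 = sigmoid(5 * x - 5)                # hidden neuron N1: line X = 1
    n2 = sigmoid(5 * y - 5)                # hidden neuron N2: line Y = 1
    return sigmoid(10 * n1 + 10 * n2 - 5)  # output neuron O: line N1 + N2 = 0.5

def is_spam(x: float, y: float, threshold: float = 0.5) -> bool:
    """Turn the score into a decision; the 0.5 threshold is an assumption."""
    return spam_score(x, y) > threshold

good_emails = [(0.3, 0.5), (0.5, 0.2)]  # made-up red circles
spam_emails = [(2.0, 0.4), (0.4, 2.0)]  # made-up green stars

for x, y in good_emails + spam_emails:
    print((x, y), round(spam_score(x, y), 3), is_spam(x, y))
```

Good emails get a score close to 0 and spam a score close to 1. In a real network, these weights would not be picked by hand but learned during training.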

Conclusion

In this article I explained how Neural Networks make decisions. But Neural Networks need to be trained in order to learn to make good decisions. In the next article of this series, I will explain how networks are trained.
